The Perils of AI-generated Content – Part 1

Three Reasons Why AI-generated Content Alone Is Not an SEO Strategy for Cybersecurity

When it comes to AI-powered writing tools, I can’t help but think about Pandora’s Box. Unable to resist curiosity, Pandora opened the box only to release troubles upon the world before shutting the lid on the ‘Spirit of Hope.’ Hope remaining in Pandora’s Box serves as a vital benefit for all mankind, but doesn’t it also come with a caution? If one just clings to a ‘Ray of Hope,’ there is a risk that one may never take action to improve one’s lot.

Similar to Pandora’s unleashed troubles, AI-generated content is certainly ‘out of the box.’ Unlike those troubles, and lucky for us, it offers huge benefits to marketers who need to create content at scale. With AI-powered writing tools like GPT-4, marketers can now generate blog posts, email copy, social media posts, entire articles, website copy, and more in mere seconds with just a few prompts. What’s not to love? But, just like Hope from Pandora’s Box, doesn’t AI-generated content also come with a caution, namely the risks to your SEO performance?

Considering that 75% of searchers don’t scroll past the first page of search results, understanding how well AI-generated content is likely to perform on Google and other search engines is table stakes when it comes to favorable SEO results.

While Google has clarified that it doesn’t automatically penalize AI-generated content, it does have long-standing guidelines against auto-generated content that provides little value. When it comes to ranking, Google uses algorithms to determine the quality and relevance of content, and prioritizes pages that demonstrate expertise, authoritativeness, and trustworthiness. In other words, the uniqueness, relevance, and usefulness of the content will determine how it performs.

While AI language models have become virtually ubiquitous, the content they produce is still far from perfect and can present SEO challenges for three reasons:

1. Lack of EEAT Signals

One of the primary ways Google’s human reviewers assess content is with the EEAT (Experience, Expertise, Authoritativeness, and Trustworthiness) framework. AI output on its own is unlikely to send strong EEAT signals for three reasons:

- No personal experience: AI-generated content has no first-hand experience on the topic it’s writing about. It cannot produce the kind of tried-and-tested content that establishes credibility.

- Questionable expertise and authoritativeness: Most AI content is a rehash of information already published online. While AI can mimic authoritative writing, it lacks the unique opinions and insights that demonstrate real subject matter expertise.

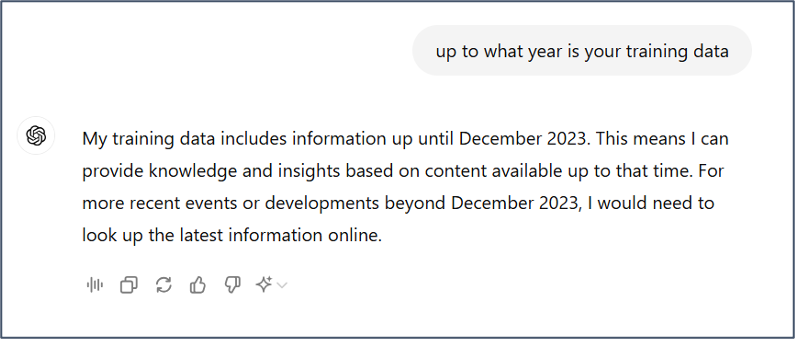

- Accuracy issues: AI models can sometimes "hallucinate" incorrect statements or mix up details—especially on topics requiring specialized knowledge. This is problematic for Your Money or Your Life (YMYL) situations where accuracy is paramount, such as the case of a New York attorney who relied on a conversational chatbot to conduct his legal research. Turns out that the chatbot not only made up six bogus precedents, it even stipulated they were available in major legal databases. Further, because AI language models are not connected to real-time data, AI-generated content may inadvertently spread misinformation due to its inability to fact-check sources. For example, ChatGPT is trained on data through 2023.

Source: ChatGPT-4

2. Shallow or Irrelevant Answers

There’s no doubt that AI writing tools excel at producing well-written responses, but oftentimes the information is only surface-level or off-the-mark. Because AI content can misuse words and fail to pick up on nuances, responses run the risk of being redundant, tangential, or irrelevant to the search query.

3. Potential Plagiarism

AI systems like GPT-4 ingest massive datasets scraped from public sources like books, academic papers, websites, and more, absorbing this content without regard for copyrights, attribution, or plagiarism risks. As a result, the text they generate can closely echo existing sources without crediting them.

Cybersecurity SEO Requires Human Expertise

Generative AI certainly has its merits. There’s no arguing that it is an incredibly easy-to-use tool for planning content, concept ideation, and rapidly scaling SEO content creation, but it’s not a shortcut to higher SEO rankings when it comes to cybersecurity. In fact, low-quality, confusing, factually incorrect, or copied content could be demoted or removed entirely from search results.

Security IT professionals are likely the most suspicious and difficult audience to reach. Standing out in a competitive market and getting the attention of the cautious security IT pro requires the subject matter expertise and industry authority that only humans are capable of providing. Bottom line, you’ve got to build trust with your audience. Humans have information that goes beyond algorithms and know how to vet information and present compelling, factual, valuable content in ways that resonate with and meet the needs of security IT pros.

Content creation, however, is often the Achilles’ heel of cybersecurity marketing teams because it requires translating complex cybersecurity concepts into engaging, accessible narratives, which demands deep technical knowledge and constant updates to stay relevant.

If your internal subject matter experts are spread very thin and/or your existing contractors aren’t technical enough, don’t despair. We have your back. With deep subject matter expertise in virtually every cybersecurity discipline, CyberEdge's content creation consultants don’t just lighten your content creation load—they help you crush it!

Contact us today for a personalized consultation!

Be sure to check out part two of this blog where we take a look at AI hallucinations and drill down into what they are, why they happen, and why it matters.